The art of a great painting is not in any one idea, nor in a multitude of separate tricks for placing all those pigment spots, but in the great network of relationships among its parts.

Background

Finding a perfect alignment of multiple parties to achieve something cooperatively is non-trivial. It might become particularly hard when resolving critical and complex problems in an organization - normally the AI solutions require properly processed data, robust infrastructure framework, clear defined business objectives, talents, ethical concerns, responsibility for organizational and societal goods, etc. - all of these become the parts that require alignment and then cooperation.

The thing is, we may not often need a global alignment among the collaborators. And if there is an efficient way to achieve the goal just sufficiently, the extra efforts are unnecessary. This idea has been reflected by many theoretical research as well as pragmatic implementations. For instance, in the behavioral economics, incentivization is the key term to describe the reflective part of human cognitive system, where the analysis based on the available information to behave that maximizes the best interests in the outcome. And apparently, due to the differences of the individual natures, the best interests vary. And thus, the behavior of the individuals being exposed to even the same environment differ, too. And, if the overall organization asks these individuals with different interests to achieve the same goal, alignment is then needed. The AI researchers have interpreted the same concept into the contextual modelling or situated cognition to describe the reactions of an AI agent at the circumstances where the agent needs to maximizes the probability to achieve its goal.

Given the differences of the individuals’ nature, aligning all of the best interests take time, and the efforts for that may grow non-linearly with the number of parties involved and also the environmental factors that need to be considered. What is more, there is no strict need of such global alignment to achieve the goal, and sometimes, a bare minimum intersection of the common interests would boost the achievement, as long as these common interests can be at least proportionally projected onto the direction of the common goal.

A parent-child dilemma

An epic headache to all the parents is to find alignment with children on something that is viewed differently by adult and kid. For example, my wife or I often argue with my 3 year-old daughter about the screen time of TV, and this is what it usually happens.

- I

You have spent too much time on watching TV. You need to cut it down.

- My daughter

My friend (the boy lives next door) also watches TV and I want to watch it, too.

And the context for us is

- My context: worried about her eyesight, sleep, focus, and health.

- My daughter’s context: happy with cartoon, colorful scenes, characters, and stories to share with friends.

The incentive for us, respectively, are

- Care for my daughter to have a long-term healthy growth.

- Have an enjoyable period of time with the cartoon.

In this scenario, I think most of the parents would know the best strategy - use a timer. It does work and the thought process behind the approach is

- Make the objective of the problem specific - determine an optimal solution to the screen time for kids.

- Take the contextual information from both parties - worries about health and joy of watching cartoon.

- Find the incentives for both - watch less cartoon and watch more cartoon.

- Seek for an intersection to achieve the contextual alignment - agree on a time limit that both are happy with.

- Sometimes, handle the probabilistic exceptions with trade-offs - watch time on birthday can be prolonged.

The same observations can be found in more sophisticated scenarios, say alignment on strategy in a corporation, than just the screen time control at home. In the company, there may be multiple parties involved in one single project, where the stakeholders may have different perspectives in terms of KPI, resource investment, timeline, focus area, and last but not least, incentives. It is like a multi-agent system where each of the agents tries to optimize its local objective within the global constraints. And in such case, it is even more challenging than the screen time case to find a universal alignment, and an endless endeavor for such alignment may lead to delay or opportunity loss for the entire organization. Actually, most of times, the global alignment is not needed, because the common interest of the company plays an overwhelming role in determining the gain of the cooperation, and anything that is not positively correlated to that gain is not necessarily helpful despite the local benefits. This is due to the so-called bounded rationality effect formalized by Hebert Simon - people choose the “satisfying” results other than the “optimal” one due to the limited context. Hence, alignment is never global or universal, it is contextual and situated in tasks, data, constraints, incentives, and possibly many other factors in the environment.

Solution framework

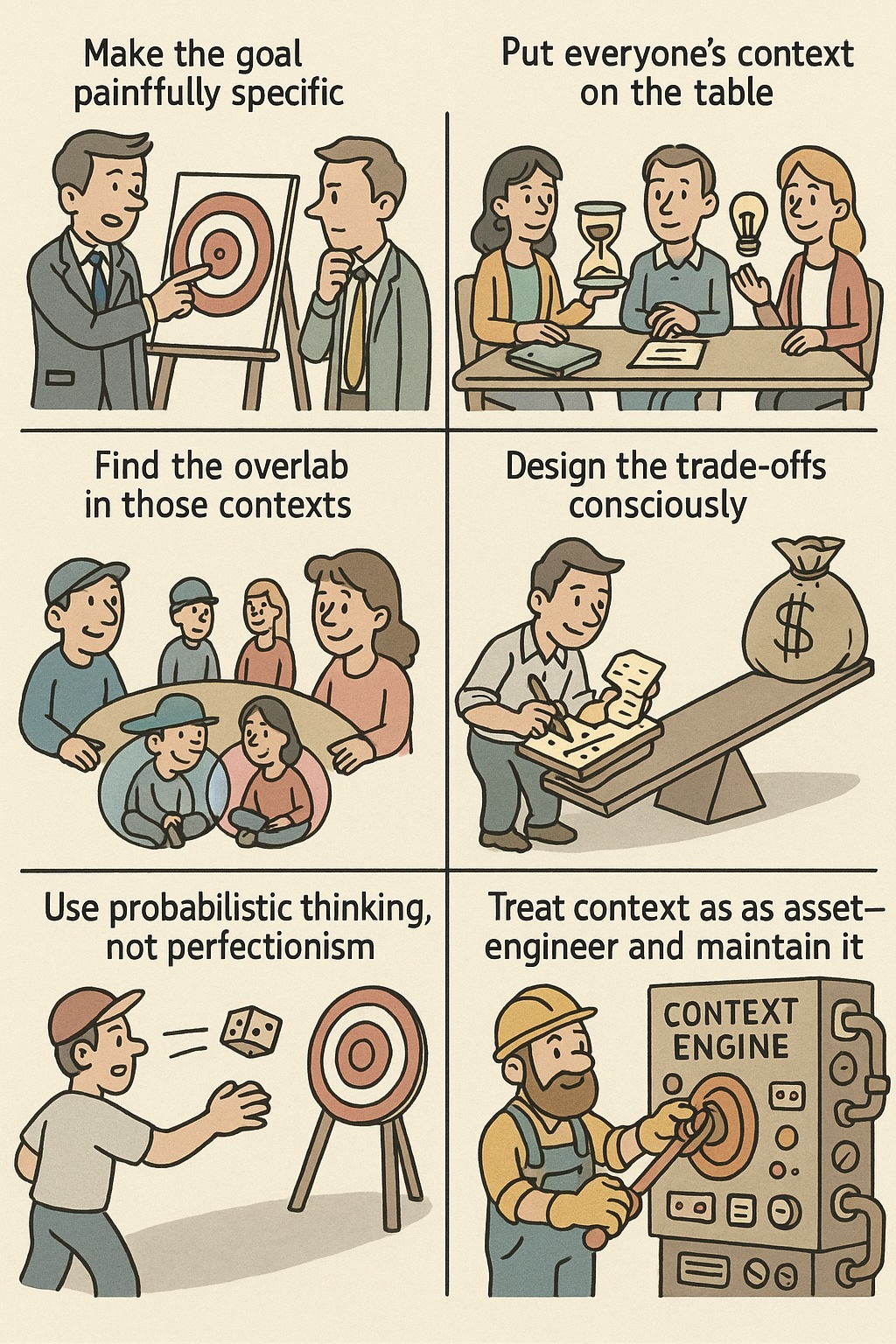

Finding the contextual alignment requires a mechanism design which define the structured operations which facilitate the system behavior in the desired manner despite the diversifying interests among different stakeholders in the system. Similar to the above example of parent-kid dilemma, the mechanism design can be formalized in several steps to align stakeholders partially without sacrificing the overall objective.

Make the goal specific

The starting point is actually where most people make mistakes. Setting a meaningful goal makes 50% of the success for the contextual alignment. However, setting a goal that does not convey the necessary information may kill the entire process. For example, thinking about a goal that is vague, purely qualitative, not informative, ambiguous, and difficult to understand by all of the stakeholders, it is challenging to proceed with any further alignment. So a good goal should be set properly, and this is based on the prerequisite that all of the stakeholders at least have the fundamental understanding of the global situation (note the awareness of the global situation is assumed to be the default knowledge basis of the stakeholders and it does not require any particular alignment).

Present everyone’s context with transparency

Context can be detailed into the aspects of incentives, risks, constraints, resources, time-aware objectives (short-term, mid-term, and long-term), etc., and the requirement for the further alignment is to present the context from each stakeholders with transparency. Strategically, the stakeholders may not always disclose the full-picture of their context or situation, so the degree of transparency for the context impact the quality of the alignment. In addition, there are weights of the different aspects for the context - for example, incentive may usually play the most vital role in the decision-making so it is weighed the highest. Risk, depends. Constraints, may vary across different parties. In general, the context presentation is key to the success of the alignment.

Find the intersection in the context.

The primary goal of alignment is to achieve the common objective under the intersection of the constraints, or broadly, the context. To find the intersection of the context is not difficult, given that the parties involved in the alignment transparently share the context. Like what Marvin Minsky argued in The Society of Mind, the alignment arises from constraint compatibility other than rational agreement. The intersection of context should not be based on preferences. Constraints may refer to the limit of budget, delivery deadline, overall ROI, etc., while preferences may refer to stylish concerns, individual limits, etc. Finding the commonality of the context take the constraints into account, such that the largest overlap in the conditions that allow the common goal to move is identified.

Use probabilistic thinking, not perfectionism.

Rationality is bounded. It is probabilistic at nature. In addition, it is time-bounded, too, which means, the same conclusion may not keep valid over time. This simply tells that, the alignment under the context does not have to be perfect to achieve the goal. Even if all the constraints are taken into account, it does not guarantee a success with 100% probability. Therefore, to align on a common goal should focus on the outcome that drives the improvement instead of a perfect plan that addresses every single problem.

Implement the interfaces with assumption for alignment.

If there is no standard interfaces, it would make the alignment hard to realize. The idea of achieving alignment through standardized interfaces rather than shared understanding appears independently across cognitive science, systems theory, organizational design, and software engineering. According to the well-known Conway’s Law, the organizational characteristics determine the implementation of the system. That is to say, the interfaces need to be implemented in a way that work the best towards the optimization of the alignment process. From the software engineering’s point of view, it requires the standardized data model, input, output, etc., to integrate the disconnected components with their local context. In the modern API-driven software architectures, the alignment scales only when interaction is constrained by explicit interfaces and assumptions - this is exactly what context engineering helps in the contemporary AI-enabled system, where the contextual artifacts are managed systematically for coordination.

Thoughts

In conclusion, alignment under context appears to be a vital mechanism in many circumstances. Alignment is not always an agreement. It requires the parties that are involved to transparently share the context (i.e., constraints) by using the standardized protocol, with which the largest intersection can be found to achieve the common goal. Inspired by the rationality theory, multi-agent system, cognitive architecture, etc., the contextual alignment helps organization, family (I am hopeful that my daughter understands what I am doing), and individual to navigate in the environment where the multi-party interactions are temporal, dynamic, and uncertain. Operating with harmony is not the key to accomplishing the goal - keeping a sustaining motion under the bounded optimality is what is sufficiently required.

References

- Herbert A. Simon (1947). Administrative Behavior. Free Press.

- Marvin Minsky (1986). The Society of Mind. Simon & Schuster.

- Stafford Beer (1974). Designing Freedom. Wiley.

- Melvin E. Conway (1968). “How Do Committees Invent?” Datamation, 14(4).

- Daniel Kahneman (2011). Thinking, Fast and Slow. Farrar, Straus and Giroux.

- Conway Melvin (1967). “Conway’s Law”.

- LangChain (2025), “Context Engineering”.

Citation

Plain citation as

Zhang, Le. Contextual Alignment. Thinkloud. https://yueguoguo.github.io/2025/12/22/contextual-alignment, 2025

or Bibliography-like citation

@article{yueguoguo2025contextualalignment,

title = “Contextual Alignment”,

author = “Zhang, Le”,

journal = “yueguoguo.github.io”,

year = “2025”,

month = “Dec”,

url = “https://yueguoguo.github.io/2025/12/22/contextual-alignment/”

}